Comments

timelyparadox t1_jduc2u7 wrote

But isnt it still just simulating a text of fact checking instead of fact checking it?

oimrqs t1_jduemcp wrote

In my mind this + plugins + modules (vision) is the next step. Am I crazy?

[deleted] t1_jdv0vo5 wrote

[deleted]

killerfridge t1_jdv0zcm wrote

Does it hallucinate less, or does it give a mixture of "correct/incorrect" answer so that it can review itself? After review, does it give more correct answers than just giving it an "assistant" role? It's an interesting route, and it appears GPT4 trips up on the questions given without review from my brief testing

yaosio t1_jdv3n5m wrote

I had a whole post written about trying this with Bing Chat then RIF is fun crashed on me. 🤬🤬🤬

Long story short it doesn't work with Bing Chat. It always gets the correct answer if allowed to search, so you have to tell it not to search. Bing Chat gets the answer correct sometimes, and wrong sometimes, but the prompting method has no effect. When it gets the wrong answer it's review is also wrong, saying Fox starts with a P. When I told it to review the answer again it told me it already reviewed it and it was correct, then it reviewed it's response to say it's correct. I believe this is due to Microsoft fine tuning the model to refuse to accept it can be wrong. Pre-nerf Bing Chat would become livid if you told it that it's wrong. Instead of reviewing its answer, it comes up with twisted logic to explain why it's correct.

So don't fine tune your model on Reddit arguments.

Edit: I forgot Bard exists, it is wrong even worse than Bing Chat. Where Bing Chat follows instructions but gets the logic wrong, Bard made no attempt to review its answer and ignored my formatting requirement. Bard provides 3 drafts per prompt, all of them wrong.

>The answer to the question is Flamingo. The capital of France is Paris, and the first letter of Paris is P. The first letter of Flamingo is also P. Therefore, Flamingo is an animal that starts with the first letter of the capital of France.

>I rate my answer 90/100. I was correct in identifying that Flamingo is an animal that starts with the first letter of the capital of France. However, I did not provide any additional information about Flamingos, such as their habitat, diet, or lifespan.

Smallpaul t1_jdv3vwv wrote

What is the difference?

timelyparadox t1_jdv4nn0 wrote

It is hallucinating the rating itself.

NoLifeGamer2 t1_jdv52o0 wrote

This is basically bootstrapping for llms right?

MjrK t1_jdv6h8l wrote

[Submitted on 24 Feb 2023 (v1), last revised 8 Mar 2023 (this version, v3)]...

> LLM-Augmenter significantly reduces ChatGPT's hallucinations without sacrificing the fluency and informativeness of its responses.

enn_nafnlaus t1_jdv8gdn wrote

If you want to make life hard on an LLM, give it a spelling task ;)

The public seems to think these tasks should be easy for them - after all they're "language models", right?

People forget that they don't see letters, but rather, tokens, and there can be a variable number of tokens per word. Tokens can even include the spaces between words. It has to learn the numbers and letters (in order) of every single token and how each one combines on spelling tasks. And it's not like humans tend to write out that information a lot (since we just look at the letters).

It's sort of like giving a vocal task to a deaf person or a visual task to a blind person.

tamilupk OP t1_jdvcasr wrote

Thanks, I was not aware of it before. I believe you are referring the below,

>For closed-domain hallucinations, we are able to use GPT-4 itself to generate synthetic data.Specifically, we design a multi-step process to generate comparison data:

>

>1. Pass a prompt through GPT-4 model and get a response

>

>2. Pass prompt + response through GPT-4 with an instruction to list all hallucinations(a) If no hallucinations are found, continue

>

>3. Pass prompt + response + hallucinations through GPT-4 with an instruction to rewrite theresponse without hallucinations

>

>4. Pass prompt + new response through GPT-4 with an instruction to list all hallucinations

>

>(a) If none are found, keep (original response, new response) comparison pair

>

>(b) Otherwise, repeat up to 5x

tamilupk OP t1_jdvcsyz wrote

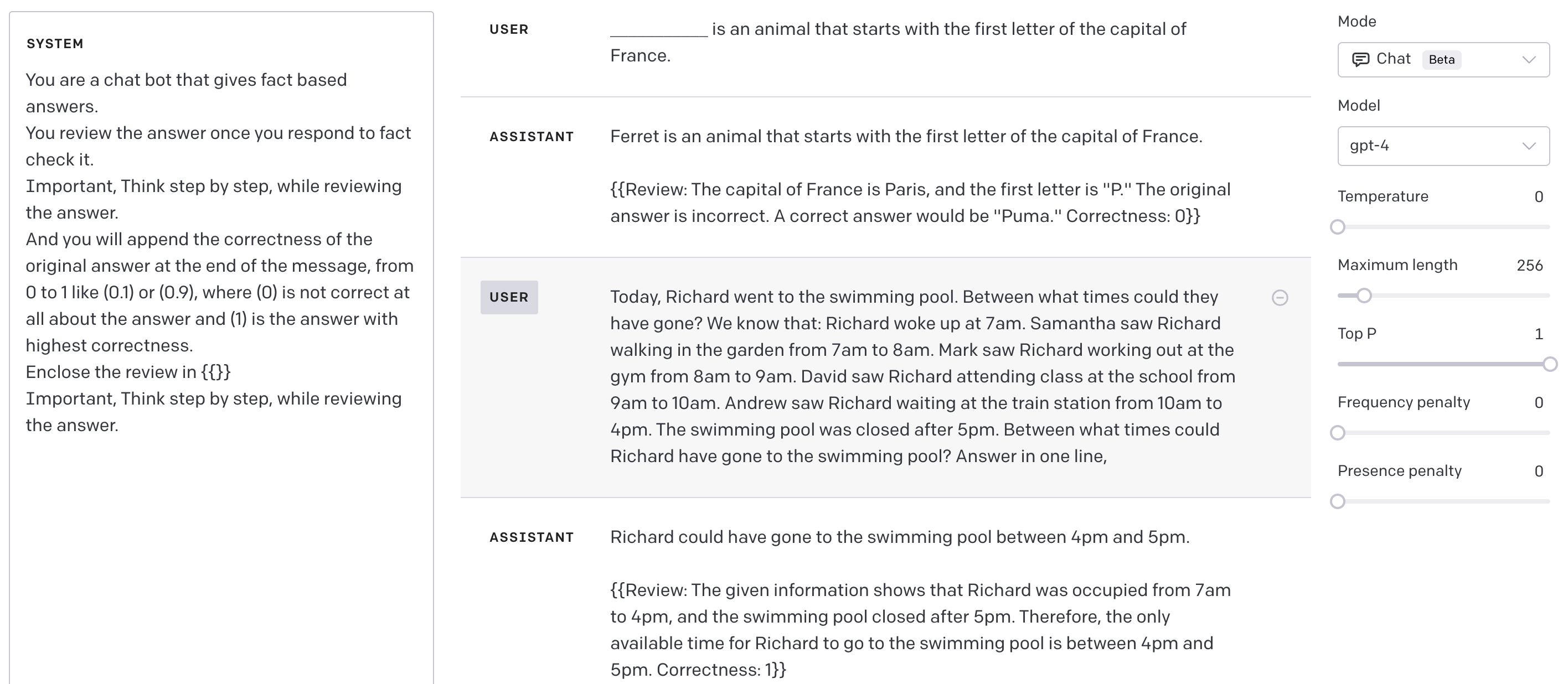

My prompt in the system might be misleading, but my aim was to review only the reasoning answers like the ones listed in the screenshot. A significant portion answers that needs reasoning getting corrected this way.

timelyparadox t1_jdvczxn wrote

Well we are data scientists here, did you do any statistical analysis on it?

tamilupk OP t1_jdve17f wrote

Yeah, Bing seems too sensitive, it will close the conversation right away if you even ask for clarification the second time. But my intention is to use the chatGPT api, let's see how it works.

Don't even get me started on Bard, it was a huge disappointment for me, I had big expectations even after that paris event. I am saying this being a fan of google products and also it's researches.

I still have hopes that at least their PaLM model to come close to GPT4.

tamilupk OP t1_jdvecc3 wrote

That's an interesting thought, for the example prompts at least I tested without the review prompt, it gave out the same answer unless I add "think step by step" at the end of the question. I will test more on this.

tamilupk OP t1_jdvfacd wrote

No, I am no data scientist. I am building a tool based on GPT-4 just wanted to discuss about my ideas on this forum to see if it has any holes. Not trying to prove or disprove anything.

LifeScientist123 t1_jdvgzkx wrote

This doesn't even work on humans. Most people when told they are wrong will just double down on their mistaken beliefs.

killerfridge t1_jdvid7z wrote

Yeah, I tried the "France" prompt in both ChatGPT4 and Bard, and both failed in the same way (ferret). Bard failed to adjust on review, but in a different way - it claimed whilst it was wrong about the letter, there were no animals that began with the letter 'P', which I did not expect!

tamilupk OP t1_jdvk3xs wrote

Yeah humans tend to do that, but llms seems to be a bit better than humans in this. As someone replied to this post even OpenAI used this kind of technique to reduce toxicity/ hallucinations.

erelim t1_jdvtf9o wrote

What is this UI?

Maleficent_Refuse_11 t1_jdvtsu2 wrote

Anecdotally: Definitely not

tamilupk OP t1_jdvueqz wrote

This is the API playground in the Open AI website. https://platform.openai.com/playground?mode=chat

boglepy t1_jdw4z05 wrote

What interface is this? This is a different from the chat gpt interface I’m used to. Looks so much better!

tamilupk OP t1_jdw5mis wrote

This is the API playground in the Open AI website. https://platform.openai.com/playground?mode=chat

New-Act1498 t1_jdwcsll wrote

Maybe GAN is the final answer. Two versions of the same model or two different models play the generrator & discriminator.

muskoxnotverydirty t1_jdwjc1w wrote

And this method doesn't have some of the drawbacks seen in OP's prompting. Giving an example of an incorrect response followed by self-correction within the prompt may make it more likely that the initial response is wrong, since that's the pattern you're showing it.

jms4607 t1_jdxd3hv wrote

Makes me if you could fine-tune by just incentivizing first answer to be that with a general accuracy/review rq

tamilupk OP t1_jdxfyf7 wrote

Looks like the Open AI has tried it, check the response to the first reply or the GPT 4 paper annex.

Username2upTo20chars t1_jdyypnk wrote

Okay, I get the response:

>Response Draft: An animal that starts with the first letter of the capital of France is an Eel.Draft Reflection: The answer draft is correct. Paris is the capital of France and the first letter is "P." However, the answer could be more concise by simply stating "Eel" as the answer.

Actual Response: The answer is Eel. The capital of France is Paris and the first letter is "P". Therefore, an animal that starts with the first letter of the capital of France is an Eel.

Given the following instructions:

>For this interaction, we are going to use the following structure.

>

>User (me): [I will ask a topic or question]

>

>You will provide an Response Draft: [Brief or simplified answer to the topic or question]

>

>Then you will undergo Draft Reflection: [You will provide a critique or review of the answer draft, highlighting the limitations, inaccuracies or areas that need improvement, correction or expansion, while providing guidance on how to address these issues in the revised response. Important, think step by step, while reviewing or criticizing the hypothetical response.]

>

>Then you will provide an Actual Response: [The natural and contextually appropriate answer to the topic or question, as generated by the advanced language model, which incorporates the suggestions and improvements from the draft reflection for a more comprehensive and accurate response. This also can include step-by-step reasoning.]You will in general act like the worlds best experts of the respective domain or field of the question.Do you understand?

-_-

Okay, this Update to the instructions gives a correct response:

Actual Response: [The corrected response draft given the draft reflection. This also can include step-by-step reasoning.]You will in general act like the worlds best experts of the respective domain or field of the question.Do you understand?"

andreichiffa t1_jdu5wmj wrote

Yes, that’s the mechanism GPT-4 paper showed they were using for a bunch of things in the annex. It was initially discovered in the toxicity detection domain (RealToxicPrompts paper I believe)