Comments

svd- t1_j840c7q wrote

Genuinely interested in learning how to build such things. Can you explain how you built this extension and linked it to ChatGPT, from a system design perspective?

Trakeen t1_j841ust wrote

I really like chatgpt but i typically find the abstract good enough to summarize the paper

niclas_wue t1_j842pyo wrote

Hey, great idea, looks very interesting. Do you use the abstract as an input or do you actually parse the paper? I built something quite similar: http://www.arxiv-summary.com which summarizes trending AI papers as bullet points. However, I think a chrome extension allows for a much more flexible paper choice, which is really great.

Reddit1990 t1_j84815i wrote

... isn't that the point of the summary at the start of a paper?

Iunaml t1_j84cwot wrote

Good enough to know if I have to read it or not. I still end up disappointed half of the time because an abstract is often a bit clickbaity.

Sola_Maratha t1_j84hy4h wrote

Guys, I tried it. It is good but not really impressive. I had higher expectations, but it's okay.

maxip89 t1_j84n20r wrote

It would add value if you can ask questions about the paper. E.g. some mechanics applied.

chillaxinbball t1_j84pek8 wrote

Yes, but what if you need to skim through dozens of papers to find what you need?

radarsat1 t1_j84uw0n wrote

Using ChatGPT to summarize multiple papers and essentially do a lit survey for you is actually a great idea.

sonicking12 t1_j84vdpj wrote

Isn’t that what the abstract is for?

t35t0r t1_j84vfdj wrote

kagi also has a summarizer that can do pdfs : https://labs.kagi.com/ai/sum

Rieux_n_Tarrou t1_j84vns3 wrote

Serious question: how are you using ChatGPT programmatically? As I understand it, OpenAI only has GPT-3 accessible via API. ChatGPT is only accessible through chat.openai.com. There is a waiting list to access the ChatGPT API.

Rieux_n_Tarrou t1_j84vvxg wrote

Yo dawg I heard you like abstracts so I made an abstract for your abstract

Majesticeuphoria t1_j84xay6 wrote

Just ask ChatGPT for most relevant papers.

DreamWithinAMatrix t1_j854b3f wrote

Doesn't OpenAI have an API for direct ChatGPT access?

A_Light_Spark t1_j854o19 wrote

Depends on the paper/authors. Sometimes they reallllyyy try to not tell you what they found or how they found it until you get to the method and conclusion.

import_social-wit t1_j85565r wrote

Nobody likes having the climax spoiled during the first few pages of a story!

A_Light_Spark t1_j8564n5 wrote

Climax my ass, I'm trying to learn, not to cum

SatoshiNotMe t1_j856nri wrote

A lot of people just write “using ChatGPT” in their app headlines when in fact they are actually using the GPT3 API. I will generously interpret this as being due to this genuine confusion :)

endless_sea_of_stars t1_j858dvn wrote

> abstract is often a bit clickbaity.

Had a vision of a nightmare future where papers are written in click bait fashion.

Top Ten Shocking Properties of Positive Solutions of Higher Order Differential Equations and Their Astounding Applications in Oscillation Theory. You won't believe number 7!

ktpr t1_j859ne1 wrote

Imagine that!

ktpr t1_j859ruq wrote

Or, click here to auto-cite this paper to learn more about number 14!

Trakeen t1_j85aa80 wrote

Probably depends on field? I’ve not typically encountered this and most other researchers are going to be looking at dozens of papers at least so they really don’t want to actually have to dig into a paper to find the meat

Trakeen t1_j85angn wrote

Yeah, that certainly seems useful, but it also sounds like a mix of search engine and ChatGPT. Microsoft's updates to Bing might be able to do that?

A_Light_Spark t1_j85cy4f wrote

Case in point:

https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3530294/

The title and the abstract are almost disjointed. I come across papers like this regularly, maybe 15% of the time?

[deleted] t1_j85flmh wrote

The problem I found with ChatGPT and other AI tools is the word limit. I believe it is 4000 words max, and that includes the summary as well.

If anyone knows a fix, please let me know. In the meantime, I use an AI-tool called scholarcy, but it lacks data to be fed with. I study a subject that is *very* reading-heavy, so I can't simply rely on the abstract, and 100 pages per week/course is mostly too much to handle, while working part-time.
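A common workaround for a length limit like this is to split the text into pieces that each fit and process them one at a time. Here is a minimal sketch in Python, assuming a word-based budget (real limits are usually counted in tokens, so treat `max_words` as a conservative stand-in). `chunk_words` is a hypothetical helper, not part of any tool mentioned here:

```python
from typing import List


def chunk_words(text: str, max_words: int = 2000) -> List[str]:
    """Split text into consecutive chunks of at most max_words words each."""
    if max_words <= 0:
        raise ValueError("max_words must be positive")
    # Splitting on whitespace is a rough proxy for the model's real tokenizer.
    words = text.split()
    return [
        " ".join(words[start:start + max_words])
        for start in range(0, len(words), max_words)
    ]
```

Each chunk can then be summarized separately, which keeps every single request under the assumed limit.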

Iunaml t1_j85g996 wrote

Cite one more paper to get 0.15% more chance of being accepted!

Rieux_n_Tarrou t1_j85l08p wrote

Whisper is a voice to text model

Rieux_n_Tarrou t1_j85l5ek wrote

No, only for GPT-3 models such as davinci.

Rieux_n_Tarrou t1_j85le82 wrote

Yes it is confusing and I don’t think openAI is incentivized to clear up the confusion 😄

VelveteenAmbush t1_j85ngvn wrote

Do a two-step. Summarize each paper so the summaries all fit into the context window, then have it compare and contrast.
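The two-step approach could be sketched roughly like this in Python. `ask_model` is a hypothetical stand-in for whatever function sends a prompt to a model and returns its reply, and the word budget is an assumed placeholder for the real context limit:

```python
from typing import Callable, List


def two_step_compare(
    papers: List[str],
    ask_model: Callable[[str], str],
    max_words: int = 3000,
) -> str:
    """Summarize each paper, then ask for a comparison of the summaries."""
    # Step 1: shrink every paper to a short summary, one request per paper.
    summaries = [
        ask_model(f"Summarize this paper briefly:\n\n{paper}") for paper in papers
    ]

    # Step 2: combine the summaries and check they fit the assumed budget.
    combined = "\n\n".join(
        f"Paper {i + 1}: {summary}" for i, summary in enumerate(summaries)
    )
    if len(combined.split()) > max_words:
        raise ValueError("Summaries exceed the budget; summarize more tightly.")

    # Final request: compare and contrast using only the short summaries.
    return ask_model(f"Compare and contrast these papers:\n\n{combined}")
```

Passing the model call in as a function keeps the sketch independent of any particular API, so it can be tried with a dummy function first.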

bik1230 t1_j85oq4m wrote

Is the amount of context ChatGPT can process really enough for a typical research paper?

_poisonedrationality t1_j85uxxg wrote

Do you know the difference between ChatGPT and GPT? Are you being misleading on purpose?

is_it_fun t1_j85wuxs wrote

Right? I can write my own version that just gives you the abstract.

lanky_cowriter t1_j85xpgw wrote

I tried this extension (https://chrome.google.com/webstore/detail/arxivgpt/fbbfpcjhnnklhmncjickdipdlhoddjoh)

It didn't really work for me. It just opens a ChatGPT page in a small window.

Trakeen t1_j863a5t wrote

I think in this specific example it is because they didn’t do any experiments. Conclusion in the abstract is rather superfluous (do more research, ya think?)

Mobile-Bird-6908 t1_j866b6d wrote

Let's start an academic journal named "Trashademia", where we only accept articles with click bait titles. If your research is otherwise not worthy of a publication, we will accept it anyways as long as the content is presented with plenty of humour and trash talk.

Mobile-Bird-6908 t1_j86708s wrote

That is literally how Microsoft is planning to incorporate ChatGPT into Edge. You'll have a side bar where you can talk to ChatGPT about whatever content is displayed on your page.

_sphinxfire t1_j868s22 wrote

Reminder: ChatGPT will routinely leave out aspects of information even if you are giving it the task of re-phrasing what you have said in a different style, if this information is deemed problematic in some way - and it will do this without even telling you.

This effect will also be present - probably even more pronounced - in summaries.

jms4607 t1_j8693ex wrote

YoloV3 would shine

SweatyBicycle9758 t1_j86dchx wrote

Waiting for that

SweatyBicycle9758 t1_j86dh2e wrote

Link?

EuphoricPenguin22 t1_j86kg2j wrote

There's an NPM package that provides an unofficial API for ChatGPT, but you have to jump through all of the hoops to get signed in before it can snag the necessary credentials.

Franck_Dernoncourt t1_j86wojs wrote

Why not impressive?

MattRix t1_j872nhe wrote

Some people also figured out that if you pass in the right model id to the regular GPT API, you get ChatGPT (not sure if this has been blocked since it was discovered).

A_Light_Spark t1_j8792en wrote

They did find some correlations. This type of meta analysis is not uncommon nowadays but few avoid answering the question as much as this paper.

Vivid-Vibe t1_j87fdst wrote

Does this have an API endpoint?

starfries t1_j87r1js wrote

I have definitely seen the kind of papers you're talking about, but this one seems fine to me? Granted I skimmed it really quickly but the title says it's a review article and the abstract reflects that.

As an aside: I really like the format I see in bio fields (and maybe others, but this is where I've encountered it) of putting the results before the detailed methodology. It doesn't always make sense for a lot of CS papers where the results are the most boring part (essentially being "it works better") but where it does it leads to a much better paper in my opinion.

humblesquirrelking t1_j87svra wrote

That’s cool 🤙

accidentally_myself t1_j87v60v wrote

...87178291200?

A_Light_Spark t1_j87y33l wrote

True that it's a review, but even reviews tend to draw conclusions, thus the reason for meta analysis.

But yeah, I also prefer to see the results first, no matter how boring.

starfries t1_j87ypnt wrote

Maybe it's a difference in fields. I rarely see people do meta-analysis in ML so it didn't strike me as odd. Most of the reviews are just "here's what people are trying" with some attempt at categorization. But I see what you mean now, it makes sense that having a meta-analysis is important in medical fields where you want to aggregate studies.

muntoo t1_j881naw wrote

Wouldn't hurt if the average paper were written more engagingly than it is now.

Not like

> "This mind-numbing discovery broke the university intranet and gave our Doc Brown lookalike professor a heart attack!",

but something better than

> "The quasi-entropic property of a Clifford algebraic structure has been determined by [7] to induce permutations upon information-theoretic monoidal categories, which are commonly known to be derived from the generalized relaxation of the Curry-Howard-Lambek formulation (Equation 112358) under Noetherian ideal invariance [41], as shown in Figure (lol jk only unsophisticated normies doth require the non-abstract nonsense known outside of Shakespearean tragedies as a figure), and therefore, this provides support for the main result of our paper: that the successor of the Mesopotamian invention 1 = succ(0) in summation with itself is equal to the successor of the successor of the aforementioned invention, which is widely believed to be the first and only even prime, and additionally happens to be a popular choice of base for logarithms in information theory, and furthermore provides a fundamental basis for classical logic which is based on the concept of truth and falsehood, ergo a number of logical states which can be described as the least number of branches under which bifurcation occurs [17,29,31-91]."

> (Dr. Obvious et al. "1 + 1 is usually 2." vixra [eprint]. 2011.)

beautifoolstupid t1_j8862or wrote

The underlying base model (GPT3.5) is the same. ChatGPT is just finetuned for dialogue which is not needed for such apps tbh.

Rieux_n_Tarrou t1_j89cidt wrote

I think I've seen what you're talking about. But are you sure it's ACTUALLY hitting chatGPT? (should be pretty easy to verify...if it's using something like a headless browser or something)

Rieux_n_Tarrou t1_j89d04y wrote

GPT3.5 is not a model that's available in the API. GPT3 davinci is the most powerful model available.

Case in point: there's a sign-up for the wait-list to get the chatGPT API

nerdymomocat t1_j89f7gp wrote

Try explain paper or elicit for that

Responsible-Item-706 t1_j89gi02 wrote

The summary is generated automatically. There should be a new section on the arxiv paper website.

beautifoolstupid t1_j89ige0 wrote

text-davinci-003 (InstructGPT) uses GPT-3.5, as mentioned by OpenAI employees on Twitter. ChatGPT is just fine-tuned for dialogue. If you use the playground, there isn’t much difference in the output. In fact, davinci is more suited for building applications IMO.

EuphoricPenguin22 t1_j89zm8l wrote

Yep; it used to access chat.openai.com and used Puppeteer (headless Chrome) to semi-automatically traverse the login. They're claiming now that they have some sort of more direct access (not GPT-3 API) and that method is obsolete, so I'm not sure what it's doing now.

Remarkable_Ad9528 t1_j8boasb wrote

Isn't this what Bing is doing out of the box? Same with the browser Opera (they're releasing a new feature called the "Shorten" button, which internally calls OpenAI). I'd expect Google to release this as part of Chrome as well.

Other-Economist8538 t1_j8by6ld wrote

No, if you visit a paper detail page (for example, https://arxiv.org/abs/2302.04818), it embeds a section and ChatGPT will start writing. Check the screenshot on the web store page again.

Other-Economist8538 t1_j8byi4v wrote

It uses ChatGPT, not GPT. It makes the same API call that you make on the https://chat.openai.com/chat site. This project is forked from this repo, and you can check the code.

Rieux_n_Tarrou t1_j8do5l9 wrote

Oh ok I was not aware of this.

Thank u for the context

beautifoolstupid t1_j8dxvz1 wrote

No worries 🙏

ntaylor- t1_j8k7v8y wrote

I had the same thought... I'm fairly sure any GPT-based model can only handle 4k tokens.

HighLevelJerk t1_j8oacj8 wrote

If ChatGPT really was that smart, it would just copy that

saffronanas t1_j9pho4p wrote

Looks like the preview has ended. Where can I use it now?

t35t0r t1_j9ugvnl wrote

there's a message under it now

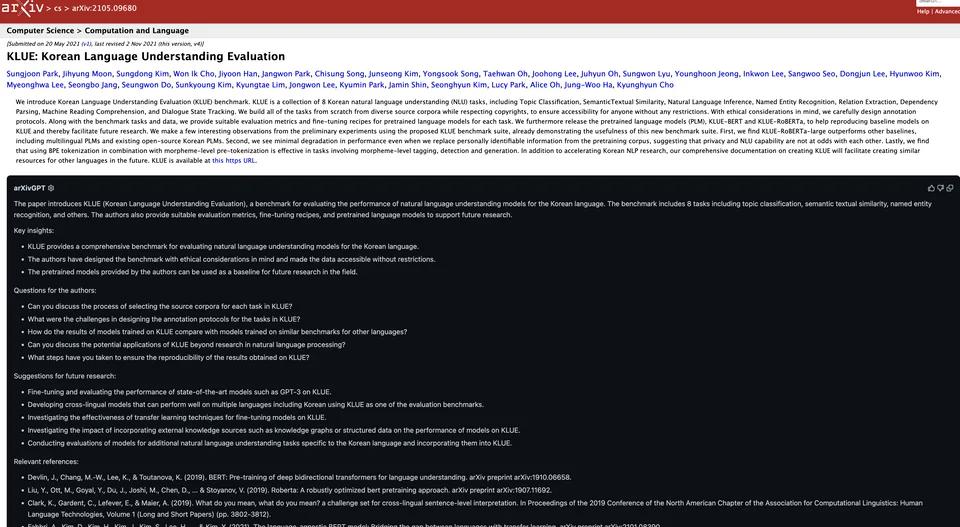

_sshin_ OP t1_j83t0er wrote

https://chrome.google.com/webstore/detail/arxivgpt/fbbfpcjhnnklhmncjickdipdlhoddjoh

To use this extension, simply install it and visit a link to an arXiv paper. It will generate a summary of the paper, including a one-sentence summary, 3-5 questions for the authors, and 3-5 suggestions for related topics. The query prompt can be customized to fit your specific needs and preferences.
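As an illustration of how a customizable query prompt like this might be assembled, here is a minimal Python sketch. The template wording, `DEFAULT_PROMPT`, and `build_query` are assumptions made for illustration and are not the extension's actual prompt or code:

```python
# Hypothetical default template; the three numbered items mirror the
# summary contents described above, but the wording is invented.
DEFAULT_PROMPT = (
    "Summarize the paper below. Include:\n"
    "1. A one-sentence summary\n"
    "2. 3-5 questions for the authors\n"
    "3. 3-5 suggestions for related topics\n\n"
    "Title: {title}\n\n"
    "Text: {text}"
)


def build_query(title: str, text: str, template: str = DEFAULT_PROMPT) -> str:
    """Fill a prompt template with the paper's title and text."""
    # A custom template only needs the {title} and {text} placeholders.
    return template.format(title=title, text=text)
```

Swapping in a different `template` is what "customizing the query prompt" would amount to in this sketch.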