Submitted by radi-cho t3_116kqvm in MachineLearning

Comments

currentscurrents t1_j97v09x wrote

In Bulgaria, no less.

impossiblefork t1_j99edtf wrote

That it's Bulgaria is probably why it's possible at all. Notice 'high school of mathematics'.

Some ex-Soviet/ex-Warsaw Pact countries have functioning maths education.

radi-cho OP t1_j99eb0v wrote

Thanks for the interest! You can follow me on Twitter: https://twitter.com/radi_cho

radi-cho OP t1_j96ydyf wrote

walkingsparrow t1_j98c2qw wrote

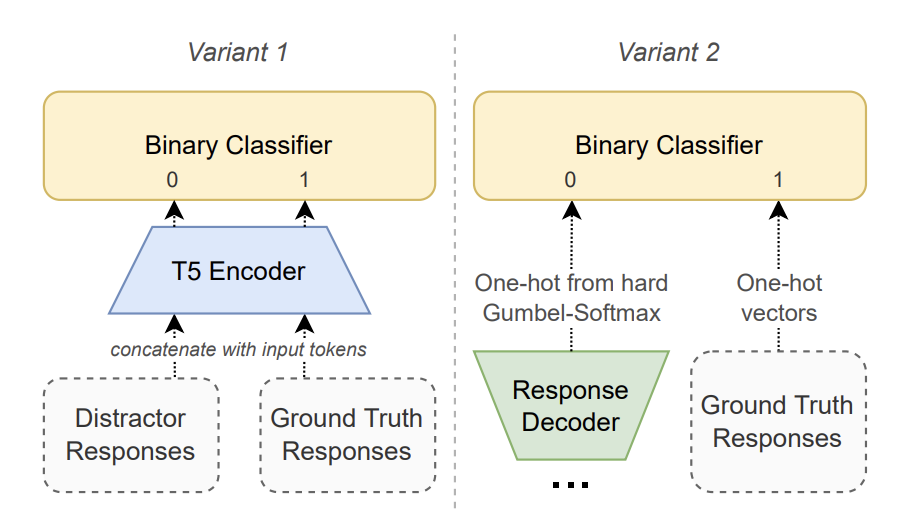

I am a bit confused. Overall, we want the generated response to be as close as possible to the ground truth. The paper adds a selection loss that distinguishes the generated response from the ground truth, which would push the generated response to be as different as possible from the ground truth. How could this help the main task of making the two responses as close as possible?

radi-cho OP t1_j99fh5s wrote

About the intuition that it would produce responses further from the human ones (in fact, we do see that BLEU is lower for this variant): in a way, it could work as a regularizer that produces more diverse responses and prevents some overfitting. That loss mostly affects the weights of the additional head, which is removed during inference, but we also multiply it by an optimal constant to make sure it doesn't affect the whole architecture too much. I've sent you a PM if you'd like more details or empirical insights.
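For readers following along, here is a minimal sketch of how an auxiliary loss like this is commonly combined with the main generation loss. It is not the paper's code: the thread does not give the exact formulation, so the setup here is an assumption. It treats the selection head as a binary classifier (label 1 = ground-truth response, 0 = generated) trained with binary cross-entropy, and it treats `aux_weight` as a hypothetical stand-in for the constant mentioned above (its real value isn't stated). All function names are illustrative.

```python
import math

def token_cross_entropy(target_token_probs):
    """Main generation loss: mean negative log-likelihood of the ground-truth tokens."""
    return -sum(math.log(p) for p in target_token_probs) / len(target_token_probs)

def bce_with_logits(logit, label):
    """Numerically stable binary cross-entropy on a raw logit.

    Assumed selection-head loss: label 1 = ground-truth response, 0 = generated.
    Equivalent to -(y*log(sigmoid(z)) + (1-y)*log(1-sigmoid(z))).
    """
    return max(logit, 0.0) - logit * label + math.log1p(math.exp(-abs(logit)))

def combined_loss(target_token_probs, selection_logits, selection_labels, aux_weight=0.1):
    """Generation loss plus a down-weighted auxiliary selection loss.

    aux_weight is a hypothetical placeholder for the scaling constant; keeping it
    small limits how much the auxiliary head's gradient reshapes the shared model.
    The selection head itself would be discarded at inference time.
    """
    gen = token_cross_entropy(target_token_probs)
    sel = sum(
        bce_with_logits(z, y) for z, y in zip(selection_logits, selection_labels)
    ) / len(selection_labels)
    return gen + aux_weight * sel, gen, sel

if __name__ == "__main__":
    total, gen, sel = combined_loss([0.9, 0.8, 0.7], [1.5, -0.5], [1, 0], aux_weight=0.1)
    print(f"total={total:.4f} generation={gen:.4f} selection={sel:.4f}")
```

Setting `aux_weight=0` recovers plain generation training, which is one way to compare the variant with and without the selection loss.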

walkingsparrow t1_j9b7j3d wrote

I think I understand now. Thanks for the explanation.

__lawless t1_j96ycry wrote

Link?

radi-cho OP t1_j96yj4l wrote

Just linked it in a top-level comment.

Cheap_Meeting t1_j972cc6 wrote

First author is a high-school student. Impressive.