Submitted by balancetheuniverse t3_11rc0wa in dataisbeautiful

Comments

PirateMedia t1_jc95t28 wrote

Check out Khan academy, they just showed how they are going to implement gpt4 into their website.

Of course not the same as it being part of the normal school curriculum, but oh well we are past that aren't we?

GamingWithShaurya_YT t1_jc9vwth wrote

oo I already use the platform

this could be sick

Hematomawoes t1_jcd9wya wrote

That’s awesome! I’ll have to look into it!

kurtuwarter t1_jc9wymx wrote

I hate the way education is procured, it doesn't ask of students to understand how to use educational materials in ways that don't involve students just ripping off lectures/last year's works and turning it in as-is.

Super frustrating, but interested in seeing it lead into Idiocracy.

Medium-Wolverine-211 t1_jc8uzlf wrote

Everything will balance out.

Brilliant_Sir_8660 t1_jc9p7md wrote

Buddy, as an educator, you could change that

Hematomawoes t1_jcd9q6x wrote

Weird that you’re assuming I’m not already trying to change that. I’m pointing out that by the time the students get to me they’ve already been introduced to these things so it’s now about trying to reteach these tools.

Brilliant_Sir_8660 t1_jcmblua wrote

That doesn’t really make any sense. These tools are less than 6 months old; not enough time has gone by to be talking about how they were introduced badly and need to be unlearned. Now is the perfect time to avoid that.

golamas1999 t1_jcmjuno wrote

It’s already here. Learn to adapt or you will be left behind like it or not.

Hematomawoes t1_jcyxhnt wrote

I have students who, last semester, were given instruction by the college advisors, enrollment specialists, and librarians on how to use it for “searches.” So yes. There is enough time for college students to have already been introduced to this in a bad way and need to be retrained/retaught how to effectively use it without copy/pasting output.

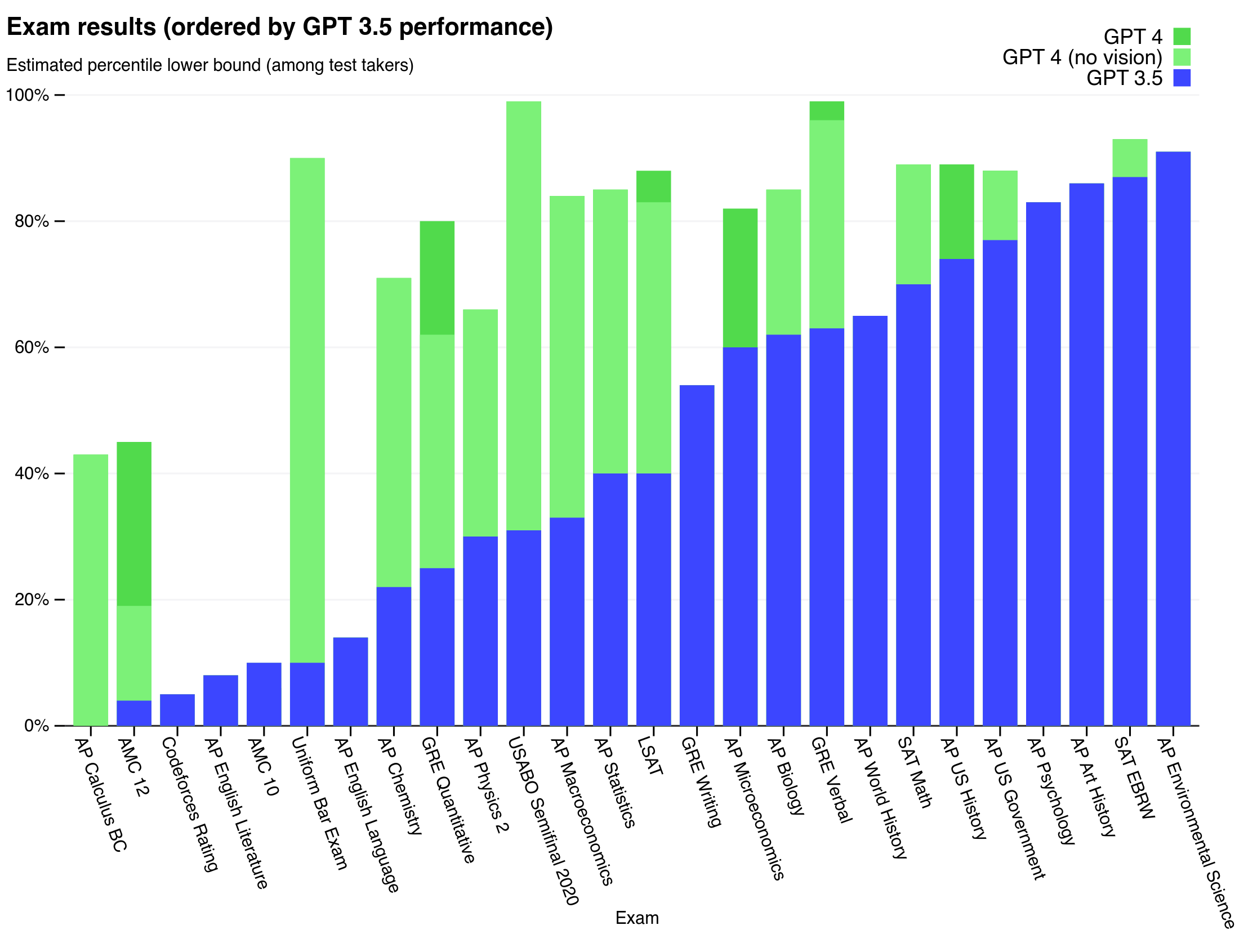

balancetheuniverse OP t1_jc7ocpg wrote

Data found on Page 6 https://cdn.openai.com/papers/gpt-4.pdf

superkeer t1_jc8rqq7 wrote

That is an unbelievably fascinating document. Thank you for linking.

wishusluck t1_jc8k1m7 wrote

I, for one, always respected my computer overlords...

fuck_all_you_people t1_jc80mls wrote

4 did worse than 3 in psychology and world history, we are making a redneck 2.0

Jackdaw99 t1_jc8rj7j wrote

How can it be so bad at calculus? It's a computer, for God's sake.

Empty_Insight t1_jc8xajq wrote

It's an algorithm trained to mimic human output from a prompt. It's only as good as what it was trained on, and people in general suck at hard science and advanced math.

Take, for example, how people 'tricked' ChatGPT into telling them how to make meth. It actually would give them different answers based on how they asked the question, and, just on the off chance that isn't obvious, that is not how chemistry works. Also I never saw it give an answer that was actually 'right' in terms of organic chemistry, either for pure pharmaceutical grade methamphetamine (aka Desoxyn) or street meth. It sure seemed right if you didn't understand the actual chemistry. It seemed convincing, even though it was wrong- same thing with calculus, I'm guessing.

Friendly reminder Wolfram Alpha exists if someone is having trouble with calculus. It not only solves the problem, but it shows you how it solved it step-by-step so it's a good study tool too.

Denziloe t1_jc9v9p2 wrote

>It's an algorithm trained to mimic human output from a prompt.

This is an over-simplification, the whole deal with ChatGPT and GPT-4 is that they weren't just trained on huge quantities of unlabelled human text, they were also specifically trained to be "aligned" to desirable properties like truth-telling.

Jackdaw99 t1_jc9244f wrote

But surely it must rate the sources it uses. Besides it seems to be very good at SAT math, which is obviously easier, but would rely on the same mimicry.

thedabking123 t1_jc93iop wrote

that's not the way that the system works.

You're using symbolic logic, its thinking is more like an intuition- a vastly more accurate intuition than ours, but limited nonetheless.

And the kicker? Its intuition of what words, characters etc. you are expecting to see. It doesn't really logic things out, it doesn't hold concepts of objects, numbers, mathematical operators etc.

It intuits an answer having seen a billion similar equations in the past and guesses at what characters on the keyboard you're expecting to see based on pattern matching.
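As a rough illustration of the pattern-matching idea described above, here is a toy sketch. It is an assumption-laden simplification, not how GPT models are actually built: it only counts which character tends to follow which in a tiny made-up corpus, and the names `train` and `predict_next` are hypothetical.

```python
from collections import Counter, defaultdict


def train(corpus: list[str]) -> dict[str, Counter]:
    """Count, for every character, which characters follow it in the corpus."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for text in corpus:
        for current, following in zip(text, text[1:]):
            counts[current][following] += 1
    return counts


def predict_next(counts: dict[str, Counter], char: str):
    """Return the most frequently seen follower of `char`, or None if unseen."""
    followers = counts.get(char)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Made-up training data: three short "equations" seen as plain text.
corpus = ["2+2=4", "2+3=5", "1+3=4"]
model = train(corpus)

# The model has no notion of addition. After "=" it simply emits the
# character it has seen most often in that position.
print(predict_next(model, "="))  # -> 4
```

The toy model answers "4" after any "=" because that is the most common pattern in its data, regardless of what was being added. That is the gist of guessing from familiar patterns rather than calculating.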

Jackdaw99 t1_jcawuaf wrote

I can tell your reply wasn't written by GPT. The possessive "its" doesn't take an apostrophe....

jk

thedabking123 t1_jcbbado wrote

lol- it may make the same mistake if enough people on the internet make the mistake... OpenAI uses all web data to train the machine.

Empty_Insight t1_jc94vo9 wrote

Even if the source is 'right,' it might not pick up the context necessary to answer the question appropriately. I would consider the fact that different prompts resulted in different answers to what is effectively the same question might support that idea.

Maybe ChatGPT could actually give someone the answer of how to make meth correctly if given the right prompt, but in order to even know how to phrase it you'd need to know quite a bit of chemistry- and at that point, you could just as easily figure it out yourself with a pen and paper. That has the added upside of the DEA not kicking in your door for "just asking questions" too.

As far as calculus goes, I can imagine some of the inputs might be confusing to an AI that is not specifically trained for them since the minutiae of formatting is essential. There might be something inherent to calculus that the AI has difficulty understanding, or it might just be user error too. It's hard to say.

Edit: the other guy who responded's explanation is more correct, listen to them. My background in CS is woefully lacking, but their answer seems right based on my limited understanding of how this AI works.

fortnitefunnies3 t1_jc9cvp4 wrote

Teach me

Hypo_Mix t1_jc9nc9u wrote

It's a language model, not a calculator. It's like trying to solve calculus by reading a calculus professor's biography.

Jackdaw99 t1_jcaxztr wrote

I would imagine the language model siphons questions off into a calculator. It would be pretty easy, considering the infinite nature of integers, to give it a simple arithmetic problem that has never been written down or even devised before. Say, "What's 356.639565777 divided by 1.6873408216337?" I would be very surprised if it didn't get this sort of thing right.

Follow-up: I just tried that calculation on ChatGPT and it got it...wrong. Twice. With different answers each time. Though it was close...

That's bizarre to me, since it couldn't have used a language model to calculate that, and in fact it explicitly told me it was sending the calculation to Python. So I don't know what's going on here.
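For anyone who wants to check that division themselves, a minimal sketch using Python's standard `decimal` module follows. The precision of 30 significant digits is an arbitrary choice for illustration.

```python
from decimal import Decimal, getcontext

# Work with 30 significant digits (an arbitrary choice for this example).
getcontext().prec = 30

dividend = Decimal("356.639565777")
divisor = Decimal("1.6873408216337")

# Exact decimal division, rounded to the configured precision.
quotient = dividend / divisor
print(quotient)

# Sanity check: multiplying back should reproduce the dividend almost exactly.
print(quotient * divisor)
```

Multiplying the quotient back by the divisor is a quick way to verify any answer a chatbot gives for the same problem.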

torchma t1_jcbubdj wrote

I don't get your comment. You know it's a language model and not a calculator and yet are surprised that it got a calculation wrong? And no, it doesn't send anything to anything else. It's a language model. It's just predicting that the sequence of words "I'm sending this calculation to python" is the most likely sequence of words that should follow.

Jackdaw99 t1_jccaord wrote

That doesn't make sense to me. It would be the easiest thing in the world to build a calculator into it, have it send questions which look like basic arithmetic in, and then spit out the answer. Hell, it could build access to Wolfram Alpha in. Then it wouldn't make basic mistakes and would be much more impressive. And after all, that's what a person would do.

Moreover, if it doesn't have the ability to calculate at all, how did it get so close to the answer when I fed it a problem which, I'm pretty sure, no one has ever tried to solve before?

And finally, how did it do so well on the math SATs if it was just guessing at what people would expect the next digit to be?

I'm not saying you're wrong, I'm just baffled by why they wouldn't implement that kind of functionality. Because as it stands, no one is ever going to use it for anything that requires even basic math skills. "ChatGPT, how many bottles of wine should I buy for a party with 172 attendees?" I'm not going to shop based on its answer.

Maybe this iteration is just further Proof of Concept, but if so, all it proves is that the concept is useless for many applications.
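The routing idea above could look roughly like the sketch below. It is purely illustrative and is not how ChatGPT works: `language_model_reply` is a hypothetical stand-in for a real model call, and the pattern only recognises a single `number operator number` expression.

```python
import operator
import re

# Matches prompts that consist of exactly one binary arithmetic expression.
ARITHMETIC = re.compile(
    r"^\s*(-?\d+(?:\.\d+)?)\s*([+\-*/])\s*(-?\d+(?:\.\d+)?)\s*$"
)
OPS = {
    "+": operator.add,
    "-": operator.sub,
    "*": operator.mul,
    "/": operator.truediv,
}


def language_model_reply(prompt: str) -> str:
    """Hypothetical placeholder for a call to a language model."""
    return "(model-generated text)"


def answer(prompt: str) -> str:
    """Send arithmetic-looking prompts to a calculator, everything else to the model."""
    match = ARITHMETIC.match(prompt)
    if match is None:
        return language_model_reply(prompt)
    left, op, right = match.groups()
    if op == "/" and float(right) == 0:
        return "division by zero is undefined"
    return str(OPS[op](float(left), float(right)))


print(answer("6 * 7"))
print(answer("How many bottles of wine should I buy for 172 attendees?"))
```

Note that the second prompt falls through to the model, since it does not look like bare arithmetic. Recognising when a natural-language question needs a calculation is the hard part that a simple pattern like this does not solve.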

torchma t1_jccg5ej wrote

Because basic calculation is already a solved problem. OpenAI is concerned with pushing the frontiers of AI, not with trivial integration of current systems. No one is going to use GPT for basic calculation anyways. People already have ubiquitous access to basic calculators. It's not part of GPT's core competency, so why waste time on it? What is part of GPT's core competency is an advanced ability to process language.

That's not to say that they are necessarily ignoring the math problem. But the approach you are suggesting is not an AI-based approach. You are suggesting a programmatic approach (i.e. "if this, then do this..."). If they were only concerned with turning ChatGPT into a basic calculator, that might work. But that's a dead-end. If OpenAI is addressing the math problem, they would be taking an AI approach to it (developing a model that learns math on its own). That's a much harder problem, but one with much greater returns from solving it.

Jackdaw99 t1_jcclxlr wrote

If they're going to release it and people are going to use it (whatever the warnings may be), I don't think it's trivial at all. Basic math factors into a significant percentage of the conversations we have. And it's certainly not trivial to be able to tell when a question needs it.

I'm not calling for it to be turned into a basic calculator: I'm asking why they don't recognize that a portion of the answers they provide will be useless without being able to solve simple math problems.

They could certainly build in a calculator now and continue to explore ways for it to learn math on its own. I just don't understand why you would release a project that gets so much wrong that could easily be made right. (And nothing I've read on it, which is a non-trivial amount, mentions that it can't (always) calculate basic arithmetic.) If I can't count on the thing to know for sure the answer to a basic division problem, I can't count on it at all -- at which point, there's no reason to use it.

torchma t1_jccsuru wrote

>I'm asking why they don't recognize that a portion of the answers they provide will be useless without being able to solve simple math problems.

?? They absolutely recognize that it's not good at math. It's not meant to be good at math. If you're still using it for math despite being told it's not good for math and despite the obvious results showing it's not good at math, then that's your problem, not theirs. That it's not good at math hardly negates its core competencies, which are remarkable and highly valuable.

>They could certainly build in a calculator now

What? That would be absolutely pointless. They might as well just tell you to use your own calculator. In fact, that's what Bing Chat would tell people if you asked it to do a math problem back before they neutered it.

a-ha_partridge t1_jc9omrp wrote

If it’s anything like me it is still trying to learn LaTeX syntax.

HW90 t1_jc9h9hu wrote

More specifically, how can it be so good at SAT Math but not AP Calculus?

Facewithmace t1_jc9tzff wrote

SAT math is mostly just Algebra 1 with a few basic geometry and prob & stats questions thrown in.

torchma t1_jc86f01 wrote

I thought it was supposed to be better at coding. Perhaps codeforces is more about advanced logic than coding?

overflowingsunset t1_jc9gn2p wrote

Next they should take a stab at medical doctor and nursing licensure exams. They test knowledge, critical thinking, good judgement, ethics, etc. Probably somewhat comparable to the bar exam.

dentist_clout t1_jc9uvpq wrote

As somebody currently studying for board exams it would be a lot more difficult for the AI to get the question right. Questions are more integration based, with pt med history, radiographs, meds…

There can be sections where it would excel if it’s straightforward pharmacology or medicine.

vtTownie t1_jca7170 wrote

It would actually do better on the prior I expect; it’s a language model so it’s designed and trained to operate based on what the expected human language output would be rather than crunching numbers. Look at the AP Chem and Calc performance

-ghostinthemachine- t1_jc9hnmq wrote

How many more iterations until it can generate software and models that are better than what we have built for it? I think we're in the final 20 year stretch.

Sufficient_Sale_4507 t1_jc9kcta wrote

aww gpt-4 and i have matching gre scores

ArcticBiologist t1_jc9zs3b wrote

Those English literature majors will end up having the last laugh...

qroshan t1_jcit6eb wrote

No they won't. It probably just means English lit grading is arbitrary.

venuswasaflytrap t1_jc9ztkh wrote

Can't see the difference between the green and the yellow

secret58_ t1_jca1ryf wrote

What level are those tests on?

Adsequalbads t1_jcacu5u wrote

How did it do on the “take over skynet test”?

amoral_ponder t1_jc9kipe wrote

Holy! Environmental Science is confirmed certified wibbly wobbly hogwash, isn't it?

wanmoar t1_jc9ysrx wrote

Or there’s just more of it online and available to GPT to absorb because it’s fairly new.

The_Gordon_Gekko t1_jc969y1 wrote

u/balancetheuniverse are you good with me sharing this over LinkedIn? Of course a call out as well.

adamr_ t1_jc9hudm wrote

OP literally posted a link to the research paper they took this graph from…. Lol

Hematomawoes t1_jc8j8v9 wrote

As an educator, I hate the way students are introduced to GPT. They don’t understand how to use it in ways that don’t involve the software just generating content for you and turning it in as-is. Super frustrating but interested in seeing where it goes.